How to Plan and Execute Software Projects 🚢

A no-nonsense guide to building the right things and building them on time.

Q4 is coming, which for many teams means planning projects for the next year.

Are these plans usually successful? Do you meet your deadlines? If you are anything like me, your track record on this might be… complicated.

Software projects of any kind — new features, internal tools, automations — usually fail in one of two ways:

🙅♂️ You built the wrong thing — everything goes according to the plan, but it turns out the plan was wrong. Users don’t care about the new feature, or the new internal tool doesn’t solve your co-workers’ problems.

⌛ It took too long — the project was due in three months, but it took seven to get things done. Scope creep? Wrong estimates? Or is it just the nature of engineering?

Executing software projects right is kind of an art. Over the years, thanks to the newsletter community, I have had the chance to meet incredible tech leaders who delivered extraordinary feats, and I learned a lot from them.

To write this piece, I went back to my chats with Aadil, who led programs at Google and Apple and delivered the insanely complex Humane AI Pin; with James, who designed and delivered the multi-exabyte Dropbox storage system; and with Thiago, Rado, and many more.

This edition puts together the best ideas I learned from dozens of such leaders, combined with my own experience, to give you a compact framework for executing projects right.

Here is the agenda:

📏 Scoping — how to approach estimates, negotiations, and budgets.

🗿 Milestones — how to design checkpoints to remove uncertainty and create progress.

🚀 Executing — how to stay on track on long projects, with divergence and convergence.

⏱️ How much time you have — how to take the rest of your work into account.

Let’s dive in!

📏 Scoping

Over time I have realized that when somebody — a product person, a CEO, whoever — comes up with a product idea, they probably have a rough timeframe already in the back of their mind.

They don’t think their idea is worthwhile at any cost; they think it would be, say, a nice experiment if delivered in three months. Three months isn’t just a time here, it is an actual cost: three months multiplied by the salaries of the people on the team.
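To make the cost framing concrete, here is a minimal Python sketch that treats a project's cost as its duration multiplied by the team's combined monthly salaries. The function name `project_cost` and the salary figures are hypothetical, and the model deliberately ignores overhead, tooling, and opportunity cost.

```python
def project_cost(months: float, monthly_salaries: list[float]) -> float:
    """Rough project cost: duration times the team's combined monthly salary.

    Hypothetical model: ignores overhead, tooling, and opportunity cost.
    """
    return months * sum(monthly_salaries)


# Hypothetical team of four, monthly salaries in EUR (illustrative numbers only).
team = [8000.0, 8000.0, 7000.0, 6000.0]

print(project_cost(3, team))   # three-month appetite: 87000.0
print(project_cost(12, team))  # same team for a full year: 348000.0
```

The comparison is the point: a full year costs four times as much as the three-month experiment, which is exactly the kind of trade-off that makes appetite worth stating out loud.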

Projects only make sense if they fit a budget — which the Basecamp guys call appetite. The appetite for a project largely exists upfront, and is inseparable from the idea itself.

I have found that most issues with estimates, negotiations, and fighting between stakeholders arise from this appetite not being visible to the team, and not being treated as what it is: a constraint.

Famously, IKEA designs furniture by creating the price tag upfront. They don’t design a chair first and ask manufacturing what it will cost later. Instead, they know, for example, that there aren’t many good office chairs under €200, and that there are people in the market for them. So they ask: what can we ship at that price point? What can we include? What can we give up?

You can treat software projects like IKEA chairs. How can we solve this problem in one month? How can we design a solution that works within this tight scope?

Now, for small-ish initiatives that take up to a few weeks, I have found that getting this conversation right is 80% of the work. You align people’s expectations, make constraints visible, figure out a reasonable scope, and go for it.

For large projects, things are trickier.

Appetite might be unclear. Sure, if we are right about the need for this, a year of development might be well spent, but 1) we don’t know if we are right, and 2) we don’t know if a full year is really needed.

Enter milestones 👇

🗿 Milestones

Large projects are plagued by uncertainty, which we react to by creating milestones. But what are milestones useful for? What makes a milestone good or bad?

A framework I have used many times is the three types of knowledge:

Known knowns — e.g. we want to let users leave comments below posts.

Known unknowns — e.g. will people use this? Can we find an open source library for basic social stuff?

Unknown unknowns — e.g. it turns out we need to rethink db encryption!

What is the impact of these knowns and unknowns? To understand this, let’s think backwards: what would you do if you could magically see the future and clear all the unknowns? Say you know everything for certain: technical feasibility, the features users want and don’t want, the best design approach, etc.

If you knew everything for a fact, you would probably just jump into a long, uninterrupted batch of work, until the product is ready for release. The less learning needs to happen, the more work you can commit to.

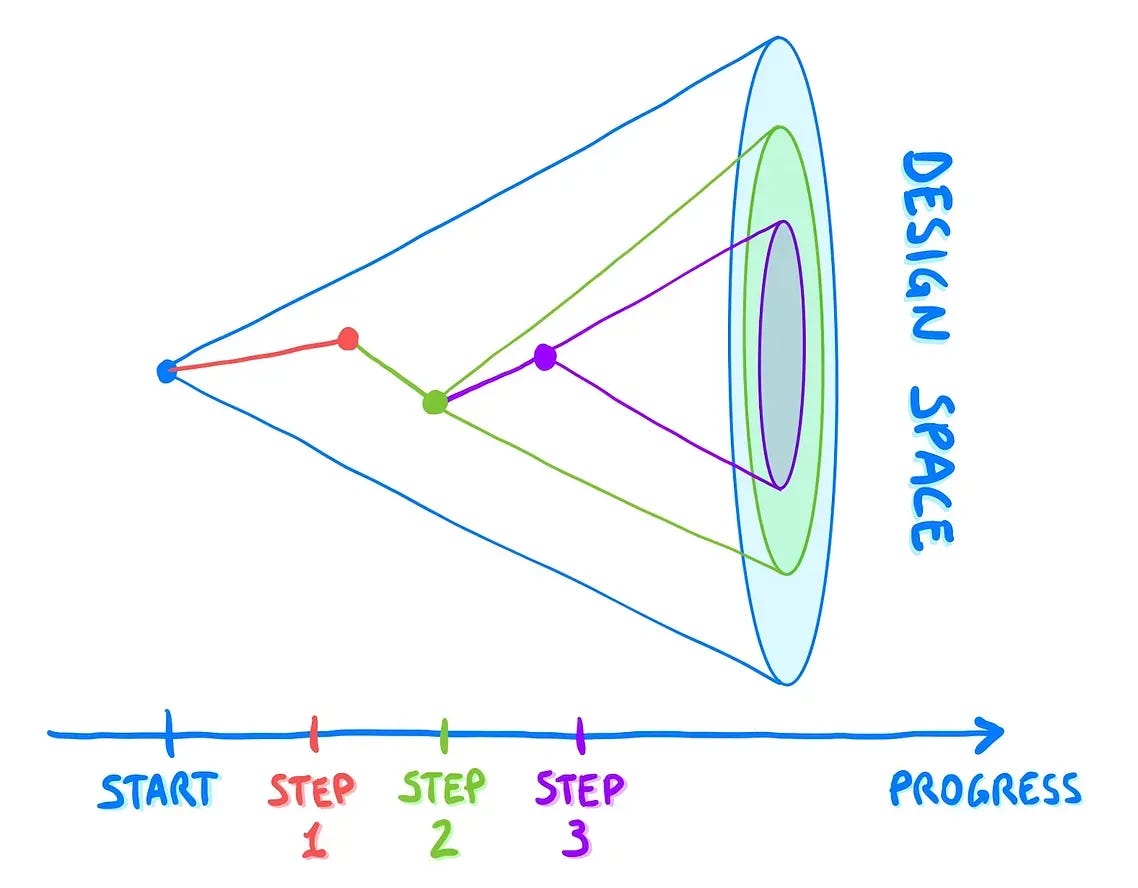

So, for any project, you want to remove unknowns as fast as possible, so you can commit to more and more work. In this light, the North Star question becomes: what is keeping our team from committing to a longer stretch of work on this project, with confidence?

That’s where milestones come into play. A good milestone:

Reduces uncertainty — there are fewer unknowns left, which unlocks longer commitment.

Moves the project forward — there are fewer things left to do.

Preserves design space — retains the agility to evolve the design in multiple ways.

So let’s take an example: say we are developing an internal tool to help some co-workers with their workflows. The unknowns here are less about technical challenges and more about building the right thing:

A bad milestone — might be to start with frontend scaffolding. It doesn’t reduce uncertainty; instead, it commits the team to a specific frontend tech, shrinking the design space and the potential for acting on learnings down the line.

A better milestone — might be to develop a basic version with a low-code tool like Retool or Airtable. With this you can reduce the uncertainty about the use cases, but you are still committing to a specific tech, and you are not moving the project forward in case you have to scrap it to make something custom.

An even better milestone — could be to develop some scripts that do the job, have people try them from the command line under your supervision, and/or build simple, throwaway interfaces on top with a no-code tool. This way you can validate use cases without committing to anything in particular, and the scripts move the project forward in case of success.

A good litmus test for a milestone is whether you might discover that you can stop the project there. Ask yourself: is there a chance, however small, that this milestone does the job and we are done? For example, you learn you don’t need those additional features, or that complex design for scale. If so, that’s a good milestone.

So, let’s say you have scoped your project right, people are on board with your estimates, you have already released a successful milestone, and you have secured four more months of work to take things to the next level.

How do you stay on track?

🚀 Executing

When executing a project over a few months, there are two things I pay the most attention to: 1) working in small iterations, and 2) divergence and convergence.

Let’s look at both.